Simple Text Classification using Keras Deep Learning Python Library – Step By Step Guide

Deep Learning is everywhere. Organizations big and small are trying to leverage the technology to build new solutions. In this article, we will do text classification using Keras, a deep learning library for Python.

Why Keras?

There are many deep learning frameworks available, such as TensorFlow and Theano. So why do I prefer Keras? The most important reason is its simplicity. Keras is a high-level API that runs on top of a backend framework; TensorFlow, Theano, and CNTK are supported.

By default it recommends TensorFlow. So, in short, you get the power of your favorite deep learning framework while keeping the learning curve to a minimum. Keras is easy to learn and easy to use.

Text Classification Using Keras:

Let’s see step by step:

Software used

- Python 3.6.5

- Keras 2.1.6 (with TensorFlow backend)

- PyCharm Community Edition

Along with these, I have also installed a few required Python packages such as numpy, scipy, scikit-learn, and pandas.

Preparing Dataset

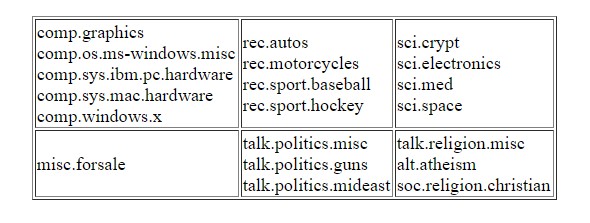

For our demonstration purpose, we will use 20 Newsgroups data set. Which is freely available over the internet. The data is categorized into 20 categories and our job will be to predict the categories. Few of the categories are very closely related. As shown below:

Generally, for deep learning, we split training and test data. We will do that in our code, apart from that we will also keep a couple of files aside so we can feed that unseen data to our model for actual prediction. We have separated data into 2 directories 20news-bydate-train and 20news-bydate-test

Importing Required Packages

```python
import pandas as pd
import numpy as np
import pickle
from keras.preprocessing.text import Tokenizer
from keras.models import Sequential
from keras.layers import Activation, Dense, Dropout
from sklearn.preprocessing import LabelBinarizer
import sklearn.datasets as skds
from pathlib import Path
```

Loading data from files to Python variables

```python
# For reproducibility
np.random.seed(1237)

# Source file directory
path_train = "C:\\DL\\20news-bydate\\20news-bydate-train"

# load_content=False returns file names and label indices only, not the text
files_train = skds.load_files(path_train, load_content=False)

label_index = files_train.target
label_names = files_train.target_names
labelled_files = files_train.filenames

data_tags = ["filename", "category", "news"]
data_list = []

# Read each file and store (filename, category, text) in a list
for f, idx in zip(labelled_files, label_index):
    data_list.append((f, label_names[idx], Path(f).read_text()))

# Build a DataFrame with the columns filename, category, news
data = pd.DataFrame.from_records(data_list, columns=data_tags)
```

In our case the data is not available as a CSV file. We have text files, and the directory in which each file is kept is its label or category. So we first iterate through the directory structure and create a data set that can be used to train our model.

We will use the scikit-learn load_files function. It can return the raw data as well as the labels and label indices. For our example, we will not load all the data in one go (load_content=False). Instead, we iterate over the files and prepare a DataFrame.

At the end of the above code, we have a DataFrame that holds the filename, the category, and the actual text.

Note: The above approach to making data available for training works because the volume is not huge. If you need to train on a huge dataset, consider a batch generator approach, in which the data is fed to your model in small batches.
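To make the batch idea concrete, here is a minimal sketch in plain Python. The function name `batch_generator` and the sample data are hypothetical and not part of the original code; a real pipeline would read documents from disk and vectorize each batch before yielding it.

```python
def batch_generator(texts, labels, batch_size):
    """Yield (texts, labels) slices holding at most batch_size items each."""
    for start in range(0, len(texts), batch_size):
        end = start + batch_size
        yield texts[start:end], labels[start:end]


# Tiny stand-in data; a real run would stream documents from disk instead.
sample_texts = ["doc a", "doc b", "doc c", "doc d", "doc e"]
sample_labels = ["sci.space", "rec.autos", "sci.med", "sci.space", "rec.autos"]

batches = list(batch_generator(sample_texts, sample_labels, batch_size=2))
for batch_texts, batch_labels in batches:
    print(len(batch_texts), batch_labels)
```

Keras 2.x can train from a generator through `fit_generator`, but it expects the generator to loop over the data indefinitely and to yield numeric arrays, so this sketch would need both additions before being used for training.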

Split Data for Train and Test

```python
# lets take 80% data as training and remaining 20% for test.
train_size = int(len(data) * .8)

train_posts = data['news'][:train_size]
train_tags = data['category'][:train_size]
train_files_names = data['filename'][:train_size]

test_posts = data['news'][train_size:]
test_tags = data['category'][train_size:]
test_files_names = data['filename'][train_size:]
```

We keep 80% of our data for training and the remaining 20% for testing. During fitting, 10% of the training portion is additionally held out for validation (validation_split=0.1).

Tokenize and Prepare Vocabulary

```python
# 20 news groups
num_labels = 20
vocab_size = 15000
batch_size = 100

# define Tokenizer with Vocab Size
tokenizer = Tokenizer(num_words=vocab_size)
tokenizer.fit_on_texts(train_posts)

x_train = tokenizer.texts_to_matrix(train_posts, mode='tfidf')
x_test = tokenizer.texts_to_matrix(test_posts, mode='tfidf')

encoder = LabelBinarizer()
encoder.fit(train_tags)
y_train = encoder.transform(train_tags)
y_test = encoder.transform(test_tags)
```

When we classify texts, we first pre-process the text using the bag-of-words method. Keras comes with a built-in Tokenizer that can be used to convert your text into a numeric vector. The texts_to_matrix method above does exactly that: each document becomes one row of length vocab_size, here weighted by TF-IDF.
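As a rough illustration of what a bag-of-words matrix looks like, the sketch below builds one in plain Python using raw word counts. It is a simplification, not the Keras implementation: the helper names are hypothetical, punctuation is not filtered, no index is reserved, and no TF-IDF weighting is applied.

```python
from collections import Counter


def build_vocab(texts, vocab_size):
    """Map the vocab_size most frequent words to column indices."""
    counts = Counter(word for text in texts for word in text.lower().split())
    # Sort by descending frequency, then alphabetically so ties are deterministic.
    ranked = sorted(counts.items(), key=lambda kv: (-kv[1], kv[0]))
    return {word: idx for idx, (word, _) in enumerate(ranked[:vocab_size])}


def texts_to_count_matrix(texts, vocab):
    """Return one row per text; each column counts one vocabulary word."""
    matrix = []
    for text in texts:
        row = [0] * len(vocab)
        for word in text.lower().split():
            if word in vocab:  # words outside the vocabulary are dropped
                row[vocab[word]] += 1
        matrix.append(row)
    return matrix


docs = ["the rocket launch", "the car engine", "rocket engine test"]
vocab = build_vocab(docs, vocab_size=4)
matrix = texts_to_count_matrix(docs, vocab)
print(vocab)
print(matrix)
```

Word order is lost in this representation; every document is reduced to a fixed-length vector, which is what lets a plain dense network consume it.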

Pre-processing Output Labels / Classes

As we have converted our text to numeric vectors, we also need to make sure our labels are represented in a numeric format accepted by the neural network model. Prediction is all about assigning a probability to each label, so we need to convert our labels to one-hot vectors.

scikit-learn has a LabelBinarizer class which makes it easy to build these one-hot vectors.

```python
encoder = LabelBinarizer()
encoder.fit(train_tags)
y_train = encoder.transform(train_tags)
y_test = encoder.transform(test_tags)
```
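The idea behind one-hot encoding can be sketched in plain Python as follows. The helpers `fit_classes` and `one_hot` are hypothetical stand-ins for illustration; they mimic the sorted class order that LabelBinarizer exposes through classes_, but they are not its implementation.

```python
def fit_classes(labels):
    """Collect the distinct labels in sorted order."""
    return sorted(set(labels))


def one_hot(labels, classes):
    """Encode each label as a row with a single 1 at its class index."""
    index = {name: i for i, name in enumerate(classes)}
    return [
        [1 if index[label] == i else 0 for i in range(len(classes))]
        for label in labels
    ]


tags = ["sci.space", "rec.autos", "sci.med", "rec.autos"]
classes = fit_classes(tags)
encoded = one_hot(tags, classes)
print(classes)
print(encoded)
```

The width of each row equals the number of distinct labels found while fitting, so it has to match the num_labels value used for the output layer of the model.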

Build Keras Model and Fit

```python
model = Sequential()
model.add(Dense(512, input_shape=(vocab_size,)))
model.add(Activation('relu'))
model.add(Dropout(0.3))
model.add(Dense(512))
model.add(Activation('relu'))
model.add(Dropout(0.3))
model.add(Dense(num_labels))
model.add(Activation('softmax'))
model.summary()

model.compile(loss='categorical_crossentropy',
              optimizer='adam',
              metrics=['accuracy'])

history = model.fit(x_train, y_train,
                    batch_size=batch_size,
                    epochs=30,
                    verbose=1,
                    validation_split=0.1)
```

The Keras Sequential model API lets us define our model easily. It provides simple configuration for the shape of our input data and the type of layers that make up our model. I arrived at the above model after some trials with the vocab size, the number of epochs, and the Dropout layers.
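To get a feel for the size of this network, the arithmetic below counts the trainable parameters of the three Dense layers (weights plus biases). It is a back-of-the-envelope sketch with a hypothetical helper `dense_params`; the Activation and Dropout layers add no trainable parameters.

```python
def dense_params(inputs, units):
    """Weights (inputs * units) plus one bias per unit."""
    return inputs * units + units


vocab_size, hidden_units, num_labels = 15000, 512, 20

layer_params = [
    dense_params(vocab_size, hidden_units),    # first hidden layer
    dense_params(hidden_units, hidden_units),  # second hidden layer
    dense_params(hidden_units, num_labels),    # output layer
]
total_params = sum(layer_params)
print(layer_params, total_params)
```

Almost all of the parameters sit in the first layer, which is why the vocabulary size has such a strong effect on model size and training time.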

Here is a snippet of the fit output from the final epoch, followed by the test accuracy:

```
 100/8145 [..............................] - ETA: 31s - loss: 1.0746e-04 - acc: 1.0000
 200/8145 [..............................] - ETA: 31s - loss: 0.0186 - acc: 0.9950
 300/8145 [>.............................] - ETA: 35s - loss: 0.0125 - acc: 0.9967
 400/8145 [>.............................] - ETA: 32s - loss: 0.0094 - acc: 0.9975
 500/8145 [>.............................] - ETA: 30s - loss: 0.0153 - acc: 0.9960
...
7900/8145 [============================>.] - ETA: 0s - loss: 0.1256 - acc: 0.9854
8000/8145 [============================>.] - ETA: 0s - loss: 0.1261 - acc: 0.9855
8100/8145 [============================>.] - ETA: 0s - loss: 0.1285 - acc: 0.9854
8145/8145 [==============================] - 29s 4ms/step - loss: 0.1293 - acc: 0.9854 - val_loss: 1.0597 - val_acc: 0.8742

Test accuracy: 0.8767123321648251
```

Evaluate model

```python
score = model.evaluate(x_test, y_test,
                       batch_size=batch_size, verbose=1)

print('Test accuracy:', score[1])

text_labels = encoder.classes_

for i in range(10):
    prediction = model.predict(np.array([x_test[i]]))
    predicted_label = text_labels[np.argmax(prediction[0])]
    print(test_files_names.iloc[i])
    print('Actual label:' + test_tags.iloc[i])
    print("Predicted label: " + predicted_label)
```

After the fit method has trained the model on our data set, we evaluate the model as shown above. We also predict the labels of the first ten files from the test set. The text_labels array comes from our LabelBinarizer (encoder.classes_).
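The step from a row of softmax probabilities to a text label is just an arg-max lookup. The sketch below shows it in plain Python with made-up probabilities; `decode_prediction` is a hypothetical helper, and the three class names are only a small subset used for illustration.

```python
def decode_prediction(probabilities, class_names):
    """Return the most probable class name together with its probability."""
    best = max(range(len(probabilities)), key=lambda i: probabilities[i])
    return class_names[best], probabilities[best]


class_names = ["rec.autos", "sci.med", "sci.space"]
softmax_row = [0.10, 0.15, 0.75]  # illustrative values, not real model output

label, confidence = decode_prediction(softmax_row, class_names)
print(label, confidence)
```

The lookup is only correct when class_names is in the same order that the encoder used during training.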

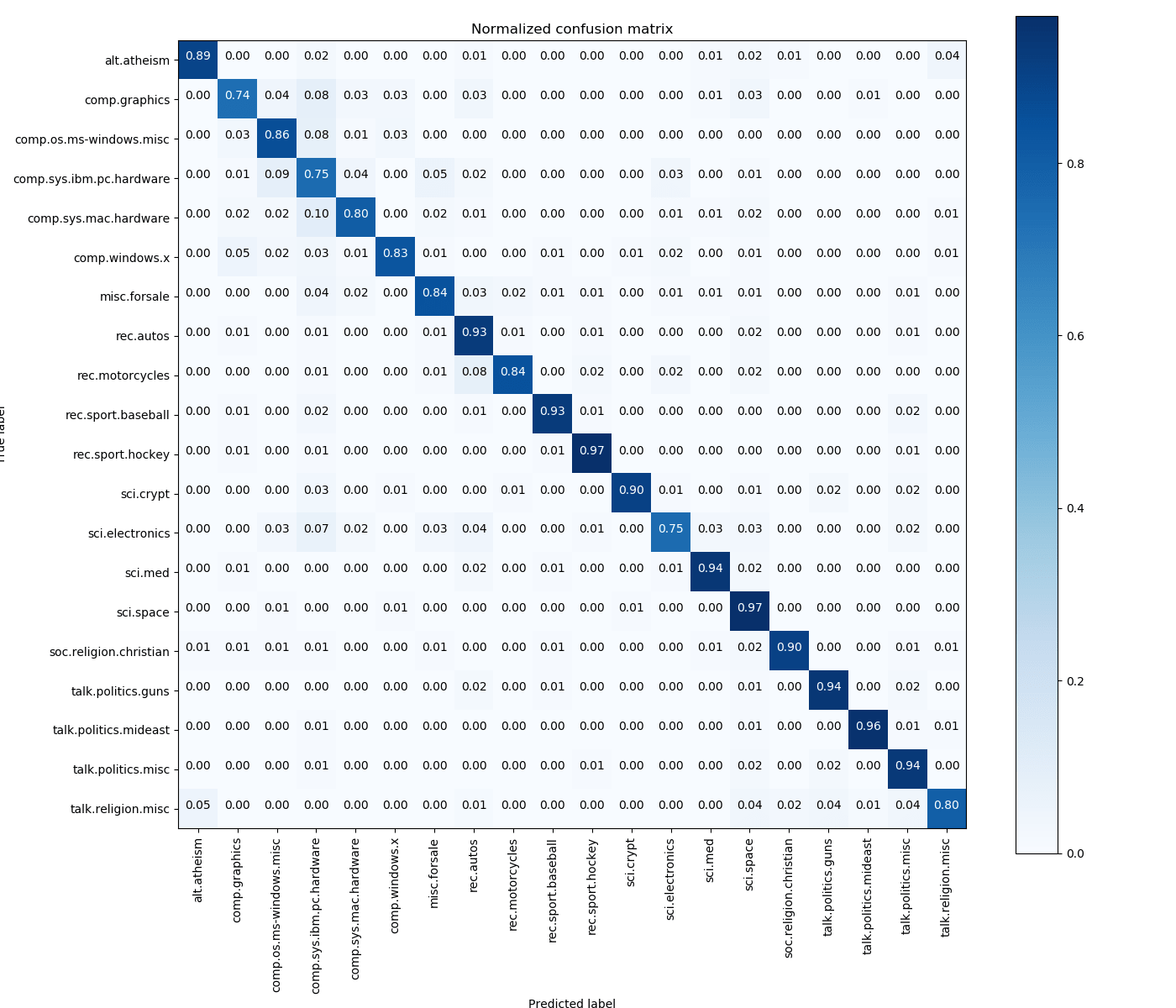

Confusion Matrix

The confusion matrix is one of the best ways to visualize the accuracy of your model. Check below the matrix from our training:
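For readers who want to compute such a matrix themselves, here is a minimal sketch in plain Python. The labels and predictions are invented for illustration, and the helper is not the plotting function from the original source; rows are actual classes and columns are predicted classes.

```python
def confusion_matrix(actual, predicted, classes):
    """Count how often each actual class (row) was predicted as each class (column)."""
    index = {name: i for i, name in enumerate(classes)}
    matrix = [[0] * len(classes) for _ in classes]
    for a, p in zip(actual, predicted):
        matrix[index[a]][index[p]] += 1
    return matrix


classes = ["comp.graphics", "rec.autos", "sci.space"]
actual = ["comp.graphics", "rec.autos", "sci.space", "sci.space", "rec.autos"]
predicted = ["comp.graphics", "rec.autos", "sci.space", "comp.graphics", "rec.autos"]

matrix = confusion_matrix(actual, predicted, classes)
# Correct predictions lie on the diagonal.
accuracy = sum(matrix[i][i] for i in range(len(classes))) / len(actual)
print(matrix, accuracy)
```

Off-diagonal cells show which categories the model confuses with each other, which is especially informative for closely related newsgroups.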

Saving the Model

In a typical deep learning use case, training happens in one session and prediction happens later, in a different session, using the trained model. The code below saves the model as well as the tokenizer. We have to save the tokenizer because it holds our vocabulary. The same tokenizer and vocabulary have to be used at prediction time for the results to be accurate.

```python
# creates a HDF5 file 'my_model.h5'
model.save('my_model.h5')

# Save Tokenizer i.e. Vocabulary
with open('tokenizer.pickle', 'wb') as handle:
    pickle.dump(tokenizer, handle, protocol=pickle.HIGHEST_PROTOCOL)
```

Keras doesn’t have any utility method to save Tokenizer along with the model. We have to serialize it separately.

Loading the Keras model

```python
import pickle
from keras.models import load_model

# load our saved model
model = load_model('my_model.h5')

# load tokenizer
with open('tokenizer.pickle', 'rb') as handle:
    tokenizer = pickle.load(handle)
```

The prediction environment also needs to know the labels, in the exact order in which they were encoded. You can get them, and store them for future reference, using:

```python
encoder.classes_ #LabelBinarizer
```
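One possible way to store the label order is a small JSON file next to the model. This is only a sketch: the file name `labels.json` and the helper names are assumptions, and only three labels are used to keep the example short.

```python
import json
import os
import tempfile


def save_labels(labels, path):
    """Write the labels to a JSON file, preserving their order."""
    with open(path, "w", encoding="utf-8") as handle:
        json.dump(list(labels), handle)


def load_labels(path):
    """Read the labels back in the order they were saved."""
    with open(path, "r", encoding="utf-8") as handle:
        return json.load(handle)


# Stand-in for the encoder's classes; a temporary directory keeps the demo tidy.
trained_labels = ["alt.atheism", "comp.graphics", "misc.forsale"]
labels_path = os.path.join(tempfile.mkdtemp(), "labels.json")

save_labels(trained_labels, labels_path)
restored_labels = load_labels(labels_path)
print(restored_labels)
```

With a fitted LabelBinarizer you would pass `encoder.classes_.tolist()` to the save helper, so the prediction script no longer needs a hard-coded label list.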

Prediction

As mentioned earlier, we have set aside a couple of files for actual testing. We will read them, tokenize them using our loaded tokenizer, and predict the most probable category.

```python
# These are the labels we stored from our training
# The order is very important here.

labels = np.array(['alt.atheism', 'comp.graphics', 'comp.os.ms-windows.misc', 'comp.sys.ibm.pc.hardware',
                   'comp.sys.mac.hardware', 'comp.windows.x', 'misc.forsale', 'rec.autos', 'rec.motorcycles',
                   'rec.sport.baseball', 'rec.sport.hockey', 'sci.crypt', 'sci.electronics', 'sci.med', 'sci.space',
                   'soc.religion.christian', 'talk.politics.guns', 'talk.politics.mideast', 'talk.politics.misc',
                   'talk.religion.misc'])

test_files = ["C:\\DL\\20news-bydate\\20news-bydate-test\\comp.graphics\\38758",
              "C:\\DL\\20news-bydate\\20news-bydate-test\\misc.forsale\\76115",
              "C:\\DL\\20news-bydate\\20news-bydate-test\\soc.religion.christian\\21329"
              ]
x_data = []
for t_f in test_files:
    t_f_data = Path(t_f).read_text()
    x_data.append(t_f_data)

x_data_series = pd.Series(x_data)
x_tokenized = tokenizer.texts_to_matrix(x_data_series, mode='tfidf')

i=0
for x_t in x_tokenized:
    prediction = model.predict(np.array([x_t]))
    predicted_label = labels[np.argmax(prediction[0])]
    print("File ->", test_files[i], "Predicted label: " + predicted_label)
    i += 1
```

Output

```
File -> C:\DL\20news-bydate\20news-bydate-test\comp.graphics\38758 Predicted label: comp.graphics
File -> C:\DL\20news-bydate\20news-bydate-test\misc.forsale\76115 Predicted label: misc.forsale
File -> C:\DL\20news-bydate\20news-bydate-test\soc.religion.christian\21329 Predicted label: soc.religion.christian
```

As we know the directory name is the true label for the file, the prediction above is accurate.

Conclusion

In this article, we’ve built a simple yet powerful neural network by using the Keras Python library. We have also seen how easy it is to load the saved model and do the prediction for completely unseen data.

The complete source code is available to download from our GitHub repo.


Appreciate the tutorial, but I think the results are misleading because the headers, footers, and quotes were probably not removed from the data. They contain things like subject lines and email addresses which the neural network would leverage for prediction rather than using the content. If you scrub those from the content, accuracy will drop below 70%.

Hi Pavan, what type of Neural Network have you used in this example?

I believe it's a Multilayer Perceptron.

Hi,

First of all, thanks for the amazing code. It was really useful.

I do have a question: the texts in the dataset I am working with can each correspond to several labels, not just one. Is it possible to carry out this analysis with this code? If so, what type of change should I make?

I am getting a shape error while predicting a single text.

When I run the code under Build Keras Model and Fit, I get this error:

ValueError: Error when checking target: expected activation_3 to have shape (20,) but got array with shape (21,) , please help.

Hi

Thank you very much for this tutorial.

I have a question about saving a model in Keras. I applied a deep learning model and word2vec to classify some texts. However, I am not sure how I can use this model again after saving it.

Hello,

Thanks for sharing this article with us. I came up with one question while looking at the code. I hope you can help me with it.

What would be the advantage of an LSTM if we apply tfidf to the data itself?

I mean, with tfidf we lose the order of the data, so how can an LSTM or any time-distributed model help us with it?

```
ValueError                                Traceback (most recent call last)
in ()
----> 1 x_train = tokenizer.texts_to_matrix(train_posts, mode='tfidf')
      2 x_test = tokenizer.texts_to_matrix(test_posts, mode='tfidf')

~\Anaconda3\lib\site-packages\keras_preprocessing\text.py in texts_to_matrix(self, texts, mode)
    378         """
    379         sequences = self.texts_to_sequences(texts)
--> 380         return self.sequences_to_matrix(sequences, mode=mode)
    381
    382     def sequences_to_matrix(self, sequences, mode='binary'):

~\Anaconda3\lib\site-packages\keras_preprocessing\text.py in sequences_to_matrix(self, sequences, mode)
    405
    406         if mode == 'tfidf' and not self.document_count:
--> 407             raise ValueError('Fit the Tokenizer on some data '
    408                              'before using tfidf mode.')
    409

ValueError: Fit the Tokenizer on some data before using tfidf mode.
```

I followed the code exactly and got this error. Any idea what this means?

Hi Kaleb,

Can you check that your code calls fit_on_texts on the tokenizer and that it is indeed fitting on some data? Maybe the data set it is trying to fit on is empty.

I don’t think this code will work for all types of data. The one hot encoding changes the shape of the features and the target and it throws this error, “ValueError: Input arrays should have the same number of samples as target arrays. Found 1 input samples and 108 target samples.”, when I try to train the data. I loaded my data from csv files into dataframe instead of loading files.

Thanks for the code. This is one of the few articles where someone starts with their own data rather than a Keras/TensorFlow dataset that is already pre-processed. I was struggling with scikit-learn vectorization, and this helped me a lot to understand pre-processing.

Hi Vineet,

Glad you found this useful.

The read_text() method may need encoding='cp1252' as an argument.

Thank you very much. I was adding it to the load_files function and it would not work.

When loading the model, we should import the load_model function:

from keras.models import load_model

Otherwise it won't work.

Can you provide the code that generated the confusion matrix? Thanks!

Hi Hajer,

Please refer to the file 20_news_group_classification_with_cnf_matrix.py. In this file, the function plot_confusion_matrix plots the matrix. The above files are available in the GitHub repository.